Hey everyone, we may have learned that LLMs take it easy over the holidays, Microsoft responded to Google’s Gemini performance metrics, OpenAI announced a partnership with journalists, and an interesting LLM compression white paper was published.

But first, one of our members spoke about AI at the Autonomous Innovation Summit.

Jenny Discusses What LLMs Can Teach About Being Human

Jenny, one of our members, recently spoke at the Autonomous Innovation Summit, discussing how generative AI redefines human behavior and connection.

She has mentioned on Slack that LLMs are human roleplaying machines, and indeed most of us do not anthropomorphize them enough.

GPT-4 Might Be Taking a Winter Break

Speaking of being human, even generative AI takes it easy during the holidays 🎄. This makes sense when you consider all of the material large language models have consumed about holiday breaks and seasonal depression.

Rob Lynch found that GPT-4’s API produces shorter completions when it “thinks” the month is December (orange) than when it “thinks” the month is May (blue). The finding comes at a time when many users are complaining about GPT-4 getting lazy.

While this sounds too good to be true, the differences are statistically significant and have already been independently reproduced. Some are calling this phenomenon the “AI Winter Break Hypothesis”.
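For anyone who wants to run this kind of comparison themselves, here is a minimal sketch of a two-sample significance check on completion lengths. It uses Welch's t-test, which may or may not be the test used in the original experiment. The numbers are synthetic placeholders, not Rob Lynch's measurements, and `welch_t`, `may_lengths`, and `december_lengths` are hypothetical names chosen for illustration.

```python
import math
import random
import statistics


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    mean_a, mean_b = statistics.fmean(sample_a), statistics.fmean(sample_b)
    # Squared standard error of each sample mean.
    se_a = statistics.variance(sample_a) / len(sample_a)
    se_b = statistics.variance(sample_b) / len(sample_b)
    t = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_a + se_b) ** 2 / (
        se_a ** 2 / (len(sample_a) - 1) + se_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Synthetic completion lengths (in characters), for illustration only.
# In a real run these would come from API calls whose system prompt
# states a date in May or in December.
rng = random.Random(0)
may_lengths = [rng.gauss(1000, 100) for _ in range(400)]
december_lengths = [rng.gauss(950, 100) for _ in range(400)]

t_stat, dof = welch_t(may_lengths, december_lengths)
print(f"t = {t_stat:.2f}, degrees of freedom = {dof:.0f}")
```

With samples this large, an absolute t value above roughly 2 corresponds to significance at about the 5% level. A positive value here means the "May" completions are longer on average.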

Microsoft Shows GPT-4 Surpassing Gemini with Advanced Prompting

Thank you Dan for sharing this. Microsoft responded to Google’s claim that its large language model Gemini Ultra was superior to OpenAI’s GPT-4.

According to Google, Gemini Ultra surpassed GPT-4 in 30 out of 32 metrics (some of which were questionable). However, after Microsoft introduced a sophisticated prompt composition strategy called Medprompt, GPT-4 pulled back ahead.

While this certainly shows GPT-4’s latent capabilities, it also highlights how advanced prompting techniques can unlock the full potential of large language models.

OpenAI Partners with Axel Springer to Integrate Journalism in AI

Thank you Branden for sharing this. OpenAI entered a multiyear licensing agreement with Axel Springer, a major news-publishing corporation with brands like Business Insider, Politico, and Bild.

Under the agreement, ChatGPT will link to the original Axel Springer sources when using their material in responses, boosting visibility and traffic for the publisher. The financial details of the deal remain undisclosed, but it promises significant revenue for Axel Springer.

Additionally, the partnership allows Axel Springer to leverage OpenAI’s technology to enhance their journalistic products. While the deal isn’t exclusive, it sets a precedent for future interactions between AI developers and news organizations, addressing the industry’s concerns over AI-driven content usage without proper attribution or compensation. This agreement follows a similar, though more limited, arrangement between OpenAI and The Associated Press.

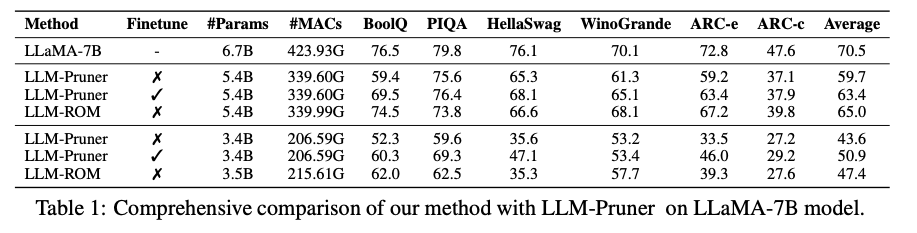

New LLM Compression Technique Obviates Need for GPUs

A white paper came out this week introducing LLM compression based on reduced order modeling, which entails low-rank decomposition within the feature space and re-parameterization in the weight space.

This technique obviates the need for a GPU device, and the associated computation cost is quite small. Their LLM-ROM method outperforms LLM-Pruner at 80% and 50% compression without any fine-tuning.
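To make the idea concrete, here is a rough sketch of one way to read "low-rank decomposition within the feature space and re-parameterization in the weight space" for a single linear layer. It is not the paper's implementation. It assumes a bias-free layer, a small batch of calibration inputs, and a principal-component basis computed from the layer's outputs; `compress_linear`, `calib_inputs`, and the toy dimensions are all hypothetical. It uses only NumPy on the CPU.

```python
import numpy as np


def compress_linear(weight, calib_inputs, rank):
    """Factor a (d_in, d_out) weight into two thin matrices.

    The basis comes from the layer's output features on calibration
    data, not from the weight matrix alone.
    """
    features = calib_inputs @ weight                   # (n, d_out)
    cov = features.T @ features / len(features)        # (d_out, d_out)
    _, eigvecs = np.linalg.eigh(cov)                   # eigenvalues ascending
    basis = eigvecs[:, -rank:]                         # top-`rank` directions
    down = weight @ basis                              # (d_in, rank)
    up = basis.T                                       # (rank, d_out)
    return down, up


# Toy layer whose weight has rank 4, so a rank-4 factorization is lossless.
rng = np.random.default_rng(0)
weight = rng.normal(size=(16, 4)) @ rng.normal(size=(4, 12))
calib_inputs = rng.normal(size=(64, 16))

down, up = compress_linear(weight, calib_inputs, rank=4)
error = np.abs(calib_inputs @ down @ up - calib_inputs @ weight).max()
print("parameters before:", weight.size, "after:", down.size + up.size)
print("max reconstruction error:", error)
```

The single layer `x @ weight` is replaced by two smaller ones, `(x @ down) @ up`. In this toy case the parameter count drops from 192 to 112. Real weights are not exactly low-rank, so a real compression would trade some accuracy for the smaller size.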

Member Tools & Resources

David shared a couple of libraries and projects for open-source LLM integrations, including ‘AutoGen.’